Voices are raised now and then against the risks of Free Software projects relying on GitHub. Yet the infatuation with the collaborative forge of the Californian start-up with the Octocat doesn't seem to fade.

In recent years, GitHub and its services have taken on an important role in software engineering: they are seen as easy to use and efficient for daily work, with useful features both for enterprise collaborative workflows and within Free Software projects. What are the arguments against using its services, and are they valid? We will list them first, then examine their validity.

1. Critical points

1.1 Centralization

The GitHub application belongs to a single entity, GitHub Inc., a US company which manages it alone. So a single company, under US legislation, controls access to the source code of most Free Software applications, which may be a problem for the groups using it if that source code becomes unavailable, for political or technical reasons.

The Octocat, the GitHub mascot

This centralization leads to another problem: now that GitHub has reached critical mass, it is becoming more and more difficult not to have a GitHub account. People who don't use GitHub, by choice or not, are becoming a silent minority. It is now fashionable to use GitHub, and not doing so is seen as "out of date". This phenomenon is a classic, and even the norm, for proprietary social networks (Facebook, Twitter, Instagram).

1.2 Proprietary software

When you interact with GitHub, you are using proprietary software: you have no access to its source code, and it may not work the way you think it does. This is a problem at several levels: ideologically first, but above all in practice. In GitHub's case, we send them code, which we can still control outside of their interface. We also send them personal information (profile, GitHub interactions). And above all, GitHub forces any project going through the US platform to use a crucial proprietary tool: its bug tracking system.

Windows, the epitome of proprietary software, even if others took the same path

1.3 Uniformization

Working with the GitHub interface seems easy and intuitive to most. Lots of companies now use it as a source repository, and many developers leaving one company find the same GitHub working environment in the next. This pervasive presence of GitHub in Free Software development environments is part of the uniformization of developers' working space.

Uniforms always bring the army to my mind, here the Clone Army

2. Cross-examination of the critical points

2.1 Regarding the centralization

2.1.1 Service availability rate

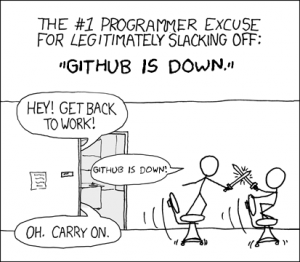

As said above, GitHub is nowadays the main repository of Free Software source code. As such, it is a favorite target for cyberattacks. DDoS attacks hit it in March and August 2015. On December 15, 2015, an outage made 5% of the repositories inaccessible. The same occurred on November 15. And these are only the incidents reported by GitHub itself. One can imagine that the mean outage rate of the platform is underestimated.

2.1.2 Chain reaction could block Free Software development

Today many dependency management tools, such as npm for JavaScript, Bundler for Ruby or even pip for Python, can fetch an application's source code directly from GitHub. As Free Software projects become more and more interlinked and codependent, if one component is down, the whole development process stops.

One of the best examples is the npmgate. Any company could legally demand that GitHub take down some source code from its repositories, which could set off a chain reaction and block the development of many Free Software projects, just as the Node.js community suffered from the decisions of npm, Inc., the company managing npm.
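To get a feel for how exposed a project is to this kind of chain reaction, one can list the dependencies that are fetched straight from GitHub. The following is only a minimal sketch for pip-style requirements files; the function name `github_dependencies` and the `example/...` repositories are hypothetical, and the pattern only covers the common `git+https://github.com/owner/repo` form.

```python
import re

# Captures "owner" and "repo" from a GitHub URL inside a requirement line.
# Assumption: only the usual github.com/owner/repo[.git][@ref][#egg=...] shape.
GITHUB_REPO = re.compile(
    r"github\.com[/:](?P<owner>[\w.-]+)/(?P<repo>[\w.-]+?)(?:\.git)?(?=[@#/]|$)"
)


def github_dependencies(requirements_text):
    """Return the 'owner/repo' of every requirement fetched from GitHub."""
    found = []
    for raw_line in requirements_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        match = GITHUB_REPO.search(line)
        if match:
            found.append(f"{match['owner']}/{match['repo']}")
    return found


if __name__ == "__main__":
    # Hypothetical requirements file mixing index and GitHub dependencies.
    sample = """
    somepackage==1.0
    git+https://github.com/example/mylib.git@v1.2#egg=mylib
    otherlib @ git+https://github.com/example/otherlib
    """
    for repo in github_dependencies(sample):
        print(repo)
```

Every repository in the resulting list is a component whose disappearance from GitHub would stop a fresh install of the project.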

2.2 A historical precedent: SourceForge

GitHub didn't appear out of the blue. In its time, its predecessor, SourceForge, was also extremely popular.

Heavily centralized and based on strong interaction with the community, SourceForge is now seen as an aging SaaS (Software as a Service) and sees most of its customers fleeing to GitHub. This creates lots of hurdles for those who stayed. The GIMP project suffered from spam and terrible advertising, which led to the departure of the VLC project, then from installers bundled with adware in place of the official GIMP installer for Windows. And finally, the GIMP project's SourceForge account was hacked by the SourceForge team itself!

These are very recent examples of what a commercial entity can do when it is under pressure from its stakeholders. It is vital to understand what it means to entrust such an entity with centralized data and exchanges, as this can have tremendous repercussions on the day-to-day life and habits of the Free Software and open source community.

2.3 Regarding proprietary software

2.3.1 One community, several opinions on proprietary software

Mostly a matter of ideology, this point depends on the definition each member of the community gives to Free Software and open source, and mostly on one question: should it be "viral" or not? In other words, GPL vs. MIT/BSD.

Those on the side of viral Free Software will have trouble using proprietary software, since for them it shouldn't even exist. It must be assimilated, to quote Star Trek, as it is a connected black box that endangers privacy, corrupts our uses for profit, restrains our freedom to use what we own as we please, etc.

Those on the side of complete freedom have no qualms about using proprietary software, as its very existence is a consequence of freedom without restriction. They even accept that code they developed may end up in proprietary software, which is quite a common occurrence. This part of the Free Software community has no qualms about using GitHub, which is well within the parameters of their ideology. Just take a look at the Janson amphitheater during FOSDEM and count how many Apple laptops running macOS are around.

FreeBSD, the main BSD project under the BSD license

2.3.2 Data loss and data restrictions linked to proprietary software use

Even without ideological considerations, and focusing just on GitHub's infrastructure, the bug tracking system is a major problem in itself.

Bug reports build the memory of Free Software projects. The bug tracker is the entry point for new contributors, the place to find bug reports, feature requests, etc. A project's history can't be limited to its code. It's very common to land on a bug report when you paste an error message into a search engine. This history is precious not only for the project itself, but also for its present and future users.

GitHub makes it possible to extract bug reports through its API. What would happen if GitHub were down, or if the platform stopped supporting this feature? In my opinion, not many projects have ever considered this outcome. How would they move all the data generated on GitHub into a new bug tracking system?
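As an illustration of what such an extraction could look like, here is a minimal sketch that pages through the issues endpoint of GitHub's REST API and collects everything into one list. The function names and the `example/project` repository are hypothetical. The sketch is unauthenticated (so rate limits apply), it does not fetch the comments, which live behind a separate endpoint, and the issues endpoint also returns pull requests.

```python
import json
import urllib.request

API_ROOT = "https://api.github.com"


def http_get_json(url):
    """Fetch a URL and decode its JSON body (unauthenticated request)."""
    request = urllib.request.Request(
        url, headers={"Accept": "application/vnd.github+json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def export_issues(owner, repo, fetch=http_get_json, per_page=100):
    """Collect every issue of a repository, open or closed, page by page.

    `fetch` is injectable so the paging logic can be exercised offline.
    """
    issues = []
    page = 1
    while True:
        url = (
            f"{API_ROOT}/repos/{owner}/{repo}/issues"
            f"?state=all&per_page={per_page}&page={page}"
        )
        batch = fetch(url)
        if not batch:
            break  # an empty page means there is nothing left to fetch
        issues.extend(batch)
        page += 1
    return issues


if __name__ == "__main__":
    # Hypothetical repository; writes a local JSON archive of its issues.
    archive = export_issues("example", "project")
    with open("issues-backup.json", "w", encoding="utf-8") as handle:
        json.dump(archive, handle, indent=2)
```

A JSON dump like this preserves the raw data, but importing it into another bug tracker still requires mapping users, labels and cross-references, which is where most of the migration effort would go.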

One example, old by now, is Astrid, a to-do list application bought by Yahoo a few years ago. Very popular, it grew fast until it was shut down overnight, leaving its users only a few weeks to extract their data. And it was only a to-do list. The same situation with GitHub would be tremendously difficult to manage for many projects, if they are able to deal with it at all. The code would still be available and could live on somewhere else, but the project's memory would be lost. A project like Debian has more than 800,000 bug reports today, a treasure trove of data about problems solved, feature requests and where development stands on each. The developers of the CPython project anticipated the problem and decided not to use GitHub's bug tracking system.

Issues, the GitHub proprietary bug tracking system

Another thing we could lose if GitHub suddenly disappeared: all the work in progress in pull requests (aka PRs). This GitHub feature lets you fork a project's GitHub repository, modify it to fit your needs, then offer your modifications to the original repository. The original repository's owner then reviews the modifications and, if he or she agrees with them, merges them into the original repository. It's one of the main advantages of GitHub, since it can all be done easily through its graphical interface.

However, reviewing all the PRs can take a long time, and most successful projects have several PRs open at once. These PRs and/or the proprietary bug tracking system are commonly used as a platform for comments and discussion between developers.

The code itself is not lost if GitHub is down (except in one specific situation, described below), but the peer review work embodied in the PRs and the bug tracking system is. Let's remember that the PR mechanism lets you fork and modify projects and then create PRs directly from the proprietary web interface, without downloading a single line of code to your computer. In this particular case, if GitHub is down, all the code and the work in progress are lost.

Some also use GitHub as a bookmarking service. They follow the activity of their favorite projects through the Watch function. This kind of technology watch would also be lost if GitHub went down.

Debian, one of the main Free Software projects with at least a thousand official contributors

2.4 Uniformization

The Free Software community is walking a tightrope between the normalization needed for easier interoperability between its products and an attraction to novelty, driven by a strong need to stand apart from what already exists.

GitHub popularized the use of Git, a great tool now used in various sectors far removed from its original programming field. Step by step, Git has become so prominent that it's almost impossible to even think of another source control manager, even though awesome alternatives such as Mercurial exist, unfortunately not as popular.

A new Free Software project is now a Git repository on GitHub, with a README.md added as a quick description. All other solutions are ostracized. How? Few potential contributors, if any, would notice such projects. It now seems very difficult to encourage potential contributors to learn a new source control manager AND a new forge for every project they want to contribute to, even though that was a basic requirement a few years ago.

It's quite sad because GitHub, by offering its users an original experience, cuts them off from a whole realm of possibilities. Maybe GitHub is one of the best web-based version control services. But its being the main one leaves no room for a new competitor to grow. And it lets GitHub initiate newcomers to development into a narrow feature set, totally unrelated to the strength of the Git tool itself.

3. Centralization, uniformization, proprietary software... What's next? Laziness?

The fight against centralization is a major part of the Free Software ideology, as centralization strengthens the power of those who manage it and who, through it, control those who depend on it. The aversion to uniformization, born in reaction to the big software companies and their wish to impose a closed, commercial software world, was for a long time the main fuel for the thirst for innovation and for the development of intelligent alternatives. As we said above, part of the Free Software community was built as a reaction to proprietary software and the threat it poses. The other part, without hoping for its disappearance, still chose a development model opposed to that of proprietary software, at least in the beginning, as there are now more and more bridges between the two.

The GitHub effect is a morbid one because of its consequences: at the very least centralization, uniformization, and the use of proprietary software such as its bug tracking system. But some years ago the "Dear GitHub" buzz revealed one more side effect, one I had never thought about: laziness. For those who don't know what it is about, this letter is a complaint from spokespersons of several Free Software projects, demanding that the GitHub team finally implement new features after years of polite requests.

Since when do Free Software projects facing a roadblock beg for clemency instead of building the path they need themselves? When Torvalds was caught up in the BitKeeper problem and the Linux kernel development team could no longer use its revision control software, he developed Git. Not being able to use a tool, or finding a feature missing, is the main motivation for seeking alternative solutions and, as such, for the Free Software movement itself. Every member of the Free Software community who is able to code should have this reflex. You don't like what GitHub offers? Switch to GitLab. You don't like GitLab? Improve it or build your own solution.

The GitLab logo

Let's be crystal clear. I've never said that every blocked Free Software developer should code his or her own alternative. We all have our own priorities, and some of us even like our beauty sleep, including me. But seeing that this open letter to GitHub has 1340 names attached to it, among them spokespersons for major Free Software projects, showed me that the need, the willpower and the strength to code a replacement are there. Maybe this replacement will be born from this letter; that would be the best outcome of this buzz.

In the end, GitHub usage is just another example of the massification of Internet usage. Just as Internet users are drawn to massively centralized social networks such as Facebook or Twitter, developers are following the same path with GitHub. Even if a large fraction of developers realize the threat posed by this centralized and proprietary organization, the whole community is following the trend toward centralization and uniformization. The GitHub service is useful, free or reasonably priced (depending on the features you need), easy to use and up most of the time. Why would we try something else? Maybe because others are using us while we are savoring the convenience? The Free Software community seems quite sleepy to me.

The lion enjoying the warmth of the hearth

About Me

Carl Chenet, Free Software Indie Hacker, founder of the French-speaking Hacker News-like Journal du hacker.


Translated from French by Stéphanie Chaptal. Original article written in 2015.

Welcome to gambaru.de. Here is my monthly report (+ the first week in October) that covers what I have been doing for Debian. If you re interested in Java, Games and LTS topics, this might be interesting for you.

Debian Games

Welcome to gambaru.de. Here is my monthly report (+ the first week in October) that covers what I have been doing for Debian. If you re interested in Java, Games and LTS topics, this might be interesting for you.

Debian Games

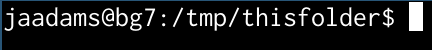

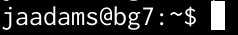

This line is called a command prompt and it tells you four pieces of information:

This line is called a command prompt and it tells you four pieces of information:

This is a shorthand notation that the shell uses to make this output shorter

when possible.

This is a shorthand notation that the shell uses to make this output shorter

when possible.  actually tells you that you are currently in the

actually tells you that you are currently in the

Continuing a series of blog posts about casual

Continuing a series of blog posts about casual

Everywhere I worked in the past, the only feedback that was asked of

employees was during a yearly evaluation meeting. These meetings always felt to me

like talking to Santa Claus and his

Everywhere I worked in the past, the only feedback that was asked of

employees was during a yearly evaluation meeting. These meetings always felt to me

like talking to Santa Claus and his

Dear lazyweb,

I am trying to get a good way to present the categorization of several cases studied with a fitting graph. I am rating several vulnerabilities / failures according to James Cebula et. al.'s paper,

Dear lazyweb,

I am trying to get a good way to present the categorization of several cases studied with a fitting graph. I am rating several vulnerabilities / failures according to James Cebula et. al.'s paper,

This post shows how to patch an external dependency for an

Android project at build-time with

This post shows how to patch an external dependency for an

Android project at build-time with